One of the often overlooked objects in Grand Central Dispatch (GCD) is dispatch_semaphore_t — they smell like legacy and look weird. However, semaphore is a useful tool for managing asynchronous processes. Specifically, use a semaphore to efficently block across dispatch queues. For example, you might want to use a semaphore to limit the number of concurrent network requests.

Background

Semaphores come to us from the late Edsger Dijkstra in the early years of computing. They there two flavours of semaphore — binary and counting. Our dispatch_semaphore_t is, the more powerful, counting semaphore. Therefore, let’s focus on how they work with an analogy.

Core Concept

When you go the DMV there is usually one shared queue. Each attendent has button that can signal when they are available. The queue proceeds with person in the front of the line going to the available attendent. The time it takes each person to met with the attendent varies. Therefore, the order of service preserves the order of enqueue however, the order of completion can vary.

Let’s assume that the queue is outside of the room with the attendants and the signal broke. How would you know when to send the next person into the other room? Watch when a person comes out and send the next person into the room. After that you would know to wait until another person exited the room. If we recorded the results they would look something like this:

| Observed | Change | Now Available |

|---|---|---|

| Exit | +1 | 1 |

| Enter | -1 | 0 |

| Wait | 0 | |

| Exit | +1 | 1 |

| Exit | +1 | 2 |

| Enter | -1 | 1 |

| Enter | -1 | 0 |

| Wait | 0 |

Implementation

A semaphore is an atomic unsigned integer. The signal and wait functions increment and decrement its value, respectivly.

| Function | Value | Returns | Blocks thread? |

|---|---|---|---|

dispatch_semaphore_create(i) |

i |

dispatch_semaphore_t |

Never |

dispatch_semaphore_signal(sema) |

i++ |

Int (non-zero if thread woken) |

Never |

dispatch_semaphore_wait(sema, timeout) |

i-- |

Int (non-zero if wait timed out) |

i == 0 |

When the value is 0, the wait function will block it’s thread until a signal increments the value to 1 and awakens the thread. Then, the wait function will decrement the value (back to 0) and proceed along it’s thread.

The

dispatch_semaphore_create() function always returns nil if you pass a value less than zero. I wrap this method in a function, to make things more Swiftful, that takes a UInt.

Locking for Lightweight Ordering

Let’s pop open a Playground and start with a simple example!

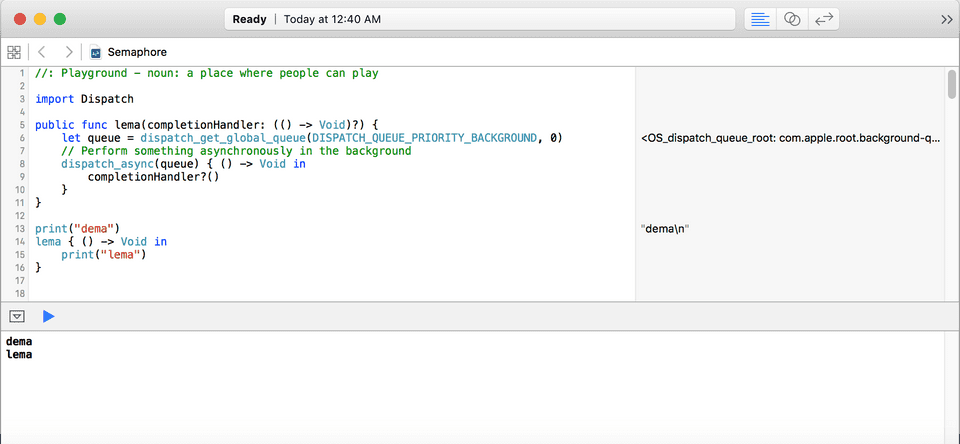

First, suppose that we have lema(), a function with a nullable completion closure (a “block” in Objective-C). I’m using this function because many asynchronous methods in UIKit have a similar form.

public func lema(completionHandler: (() -> Void)?) {

let queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_BACKGROUND, 0)

// Perform something asynchronously in the background

dispatch_async(queue) { () -> Void in

completionHandler?()

}

}

print("dema")

lema { () -> Void in

print("lema")

}The Playground should run and print “dema” and “lema” in the debug log.

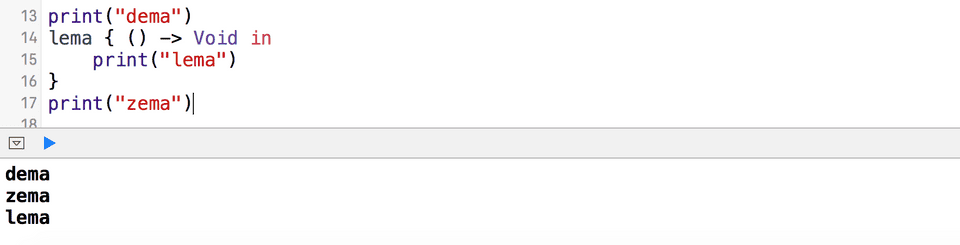

What do you expect to see, in the debug log, if we add print("zema"), on line 17?

Here’s an overview of the execution order:

- Main queue execution started by printing “dema”

dispatch_async()told the runtime to execute it’s closure, asynchronously with thequeue.- The main queue continued serially to printing “zema”

- Later, a background thread executed the closure and called

completionHandler, printing “lema”

Suppose we want to wait for some asynchronous code to complete before proceeding on a thread. We would need some way to tell the main queue thread to stop at right before step 3 and wait for the background queue to execute the closure… Time to break out our new semaphore knowledge!

print("dema")

// Create a semaphore

let sema = dispatch_semaphore_create(0)

lema { () -> Void in

print("lema")

// Increment the semaphore

dispatch_semaphore_signal(sema)

}

// Wait forever until the signal is sent

dispatch_semaphore_wait(sema, DISPATCH_TIME_FOREVER)

print("zema")That was easy! The dispatch_semaphore_wait() blocks the main thread until the semaphore’s signal was sent.

There are some caveats to using the above example in real life:

- This semaphore would cause the UI to freeze becuase it’s blocking the main thread for doing anything until the signal is recieved.

- The

dispatch_group_tAPI syntax is more graceful for completing a series of closures serially. Check out the documentation and try it out for fun!

Synchronize For Speed

The counting aspect of dispatch_semaphore_t is useful for limiting the number of simultanous threads that GCD creates when dispatching asynchronous closures.

let defaultQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0)

let MAX_CONCURRENT = 2

let sema = dispatch_semaphore_create(MAX_CONCURRENT)

for index in 1...999 {

dispatch_async(defaultQueue, { () -> Void in

// Intensive task

dispatch_semaphore_signal(sema)

})

dispatch_semaphore_wait(sema, DISPATCH_TIME_FOREVER)

}Here’s an overview of this execution:

- Each time we loop, the semaphore is decremented.

- If the semaphore’s value is

0, it will block the loop’s thread until a signal increments the value. - Once the semaphore’s wait conditions have been met, it is decremented

This pattern is handy when working with highly concurrent memory and processor intensive tasks. It avoids two important issues:

- Performance impact of creating numerous threads that wait for their turn.

- Deadlock when the task is not completely asynchronous

The land of concurrency is highly dependent on the device and nested GCD closures. My heuristic is to start at 2 threads per core. Create performance tests with

measureBlock() and tweak the number for optimal speed. You may find that the number is a bit higher for IO intensive work.

Go forth and Semaphore

Hopefully, this post has illuminated the continued relevance of GCD’s semaphore. I’ve uploaded a gist with idiomatic methods that extend dispatch_semaphore_t. Here’s the previous example rewritten using my Semaphore helper:

| import Dispatch | |

| let defaultQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0) | |

| // Initialize count down from 2 | |

| let sema = Semaphore(2) | |

| for index in 1...999 { | |

| dispatch_async(defaultQueue, { () -> Void in | |

| // Intensive task | |

| sema.signal() | |

| }) | |

| sema.wait(DISPATCH_TIME_FOREVER) | |

| } |

Focus on simple code optimization before resorting to GCD. Be mindful of the caveats, if you decide that your sitaution warrants a semaphore. Good luck!

: Important notes, cavaets or heuristics

Update: edited code styling Sept 8, 2020.

on [Unsplash](https://unsplash.com/s/photos/train-light?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText) Photo by [Xavi Cabrera](https://unsplash.com/@xavi_cabrera?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText) on [Unsplash](https://unsplash.com/s/photos/train-light?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText)](/static/c13eb48cc3594801f3905cf24f45932e/6a068/train-crossing.jpg)